Today’s post is brought to you by the letter P and the number .05. That’s right, we’re going to dig into what it really means to be “statistically significant.”

Setting the stage…imagine you wanted to educate primary care physicians about a guideline update for the management of hypertension. To do so, envision a CME roadshow of one-hour dinner meetings hitting all the major metros in the U.S. And to determine whether you had any impact on awareness…how about a case-based, pre- vs. post-activity survey of participants?

Once all the data are collected, you tabulate a score (% correct) for each case-based question, pre and post (ideally matched learner by learner). Now…moment of truth: you pick the appropriate statistical test of significance, say a short prayer, and hit “calculate,” hoping your P values come in below .05. Because…glory be! That’s statistical significance! So let’s take this scenario to its hopeful conclusion. What does it really mean when we say “statistical significance”?
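For matched yes/no answers like these, McNemar’s test is one common choice. Here’s a minimal Python sketch of its exact (binomial) form for a single question. It assumes each learner’s pre and post answers are stored as booleans (True = correct) in the same learner order; the function name `mcnemar_exact` and the 40-learner data are hypothetical.

```python
from math import comb


def mcnemar_exact(pre, post):
    """Exact (binomial) McNemar test for matched pre/post answers to one question.

    pre, post: lists of booleans (True = correct), one entry per learner,
    in the same learner order. Returns (pre_pct, post_pct, p_value).
    """
    if len(pre) != len(post) or not pre:
        raise ValueError("need matched, non-empty pre and post lists")
    n = len(pre)
    # Only learners who changed their answer (discordant pairs) drive the test.
    b = sum(1 for x, y in zip(pre, post) if x and not y)  # correct -> incorrect
    c = sum(1 for x, y in zip(pre, post) if not x and y)  # incorrect -> correct
    d = b + c
    pre_pct = 100 * sum(pre) / n
    post_pct = 100 * sum(post) / n
    if d == 0:
        return pre_pct, post_pct, 1.0
    # Null hypothesis: a changer is equally likely to move either way, so
    # min(b, c) ~ Binomial(d, 0.5). Two-sided P = twice the smaller tail, capped at 1.
    k = min(b, c)
    tail = sum(comb(d, i) for i in range(k + 1)) / 2 ** d
    return pre_pct, post_pct, min(1.0, 2 * tail)


# Hypothetical 40 learners: 20 correct both times, 2 correct -> incorrect,
# 12 incorrect -> correct, 6 incorrect both times.
pre = [True] * 20 + [True] * 2 + [False] * 12 + [False] * 6
post = [True] * 20 + [False] * 2 + [True] * 12 + [False] * 6
pre_pct, post_pct, p = mcnemar_exact(pre, post)
print(f"{pre_pct:.0f}% -> {post_pct:.0f}%, P = {p:.3f}")  # 55% -> 80%, P = 0.013
```

In this made-up example, only the 14 learners who changed their answer enter the calculation; the 26 who answered the same way both times don’t move the P value at all.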

Maybe not quite what you thought.

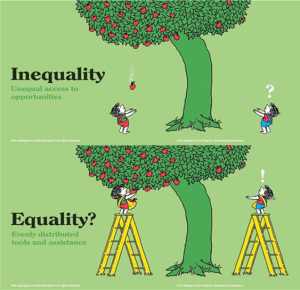

You see, statistical tests of significance (e.g., chi-square, t-test, Wilcoxon signed-rank, McNemar’s) are hypothesis tests. And the starting hypothesis (the null hypothesis) is that the two comparison groups are the same. In this case, the null hypothesis is that the pre- and post-activity % correct (for each question) of your CME participants are equivalent. The P value is the probability of seeing a pre-to-post gap at least as large as the one you observed if that null hypothesis were true. So when you cross the threshold into “statistical significance,” you’re not saying, “Hey, these groups are meaningfully different!” Instead, you’re saying, “Hey, these groups are supposed to be the same, but the data don’t fit that expectation very well!” Which, if said quickly, sounds like the same thing…but there’s a very important distinction. A test of significance doesn’t measure how different two groups are; it checks how compatible the data are with the assumption that they’re the same. You may jump to the conclusion that if they aren’t the same, they must differ in some way that matters, but the P value alone gives you no evidence about the size or importance of that difference.
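To make the “supposed to be the same” idea concrete, here’s a hypothetical Python simulation in which the activity has no effect at all, so the null hypothesis is true by construction. The numbers (200 learners, a 60% chance of answering correctly both before and after, 2,000 simulated questions) and the function names are assumptions for illustration. It applies the exact McNemar calculation to each simulated question and counts how often P still lands below .05. Expect a rate of roughly 5% or a bit less (the exact test is slightly conservative): those “significant” results are the false alarms the .05 threshold is designed to keep rare.

```python
import random
from math import comb


def mcnemar_exact_p(b, c):
    """Two-sided exact McNemar P from the two discordant counts."""
    d = b + c
    if d == 0:
        return 1.0
    k = min(b, c)
    return min(1.0, 2 * sum(comb(d, i) for i in range(k + 1)) / 2 ** d)


def false_alarm_rate(n_learners=200, p_correct=0.6, n_questions=2000, seed=1):
    """Share of simulated questions reaching P < .05 when nothing changed.

    Each learner answers pre and post independently with the same chance of
    being correct, so pre and post % correct are equivalent by design.
    """
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    hits = 0
    for _ in range(n_questions):
        b = c = 0  # correct -> incorrect, incorrect -> correct
        for _ in range(n_learners):
            pre = rng.random() < p_correct
            post = rng.random() < p_correct
            if pre and not post:
                b += 1
            elif post and not pre:
                c += 1
        if mcnemar_exact_p(b, c) < 0.05:
            hits += 1
    return hits / n_questions


rate = false_alarm_rate()
print(f"{rate:.1%} of no-effect questions reached P < .05")
```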

Yes, that is confusing, which is probably why it gets glossed over in so many reports of CME outcomes. In practice, you should think of a P value as a sniff test. If you expect every flower to smell like a rose, a P value can tell you whether a given flower’s scent is consistent with that expectation, but if P is < .05 (indicating the data don’t fit that expectation), you can’t make any assumption about the flower’s true scent. You’d need other measures to isolate the actual smell. Same thing with our CME example…a P < .05 indicates that the data don’t fit the expectation that pre- and post-activity % correct for a given question are equivalent, but it doesn’t tell us that pre and post differ in any substantive way. It’s simply a threshold test…if we find statistical significance, the correct interpretation should be, “Hey, I didn’t expect that, we should look into this further.” And that’s when other measures come into play (e.g., effect size).
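Here’s one way to put an effect size next to each P value, again as a hypothetical Python sketch with made-up counts. It reports the shift in % correct (in percentage points) and the matched-pairs odds ratio (learners who moved incorrect-to-correct divided by those who moved correct-to-incorrect), one common effect measure to pair with McNemar’s test. The function names and both scenarios are assumptions for illustration.

```python
from math import comb


def mcnemar_exact_p(b, c):
    """Two-sided exact McNemar P from the two discordant counts."""
    d = b + c
    if d == 0:
        return 1.0
    k = min(b, c)
    return min(1.0, 2 * sum(comb(d, i) for i in range(k + 1)) / 2 ** d)


def summarize(label, n, b, c):
    """Report P alongside two effect sizes for one matched pre/post question.

    n: matched learners; b: correct -> incorrect; c: incorrect -> correct.
    """
    change_pts = 100 * (c - b) / n                # shift in % correct, pre to post
    odds_ratio = c / b if b else float("inf")     # matched-pairs odds ratio
    p = mcnemar_exact_p(b, c)
    print(f"{label}: change {change_pts:+.1f} points, OR {odds_ratio:.2f}, P = {p:.4f}")
    return change_pts, odds_ratio, p


# Hypothetical scenarios: a huge roadshow with a small shift,
# and a single small dinner with a large shift.
big = summarize("Roadshow (n = 5,000)", n=5000, b=300, c=400)
small = summarize("Single dinner (n = 20)", n=20, b=1, c=7)
# Second line prints: change +30.0 points, OR 7.00, P = 0.0703
```

In this made-up comparison, the 5,000-learner roadshow clears .05 on a 2-point shift, while the 20-learner dinner misses it despite a 30-point shift. The P value reflects sample size as well as the size of the change, which is why the effect size belongs next to it.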

In summary, a P value is not an endpoint. Be wary of any outcome data punctuated solely by P values. Hence, the image for this post: are you really getting what you expected from P values?